1. Deficiency of existing methods composed with deep learning arthitectures.

- Ignoring a major class of CF(collaborative flitering) models, neighborhood or memory-based approaches.

2. CMN Model

Three parts

- internal user-specific memory

- internal item-specific memory

- collective neighborhood state

User Embedding

- $m_u$ is target user embeding, $q_{uiv}$ is a scalar which size represents similarity between $u$ and $v$, $i$.

Neighborhood Attention

- Traditional neighborhood methods predefine a heuristic weighting function such as Pearson correlation or cosine similarity and require specifiying the number of users to consider, Neighborhood Attention needn’t doing this, just compute the attention weights for a given user to infer the importance of each user’s unique contribution to the neighborhood:

- Finially construct the neighborhood attention representation by interpolating the external neighborhood memory with the attention weights:

- ($c_v$ is anthor embedding vector for user $v$; $C$ with the same dimensions as $M$; $o_{ui}$ represents a weighted sum of neighborhood composed of relations between the specific user, item and the neighborhood.)

Output Model

- The output model smoothly integrates a nonlinear interaction between the local collective neighborhood state and the global user and item memories, while existing method lack of the nonlinear interaction between the two items, limiting the extent of captured relations.

- For a given user $u$ and item $i$ the ranking score is given as:

- (where $\odot$ is elementwise product; $v,b\in \mathbb{R}^d$; and $U,W \in \mathbb{R}^{d*d}$ are parameters to be learned; $\varnothing$ is a nonlinear activation function, empirically the rectified unit(ReLU) $\varnothing(x) = max(0,x)$ to work best due to its nonsaturating nature and suitability for sparse data.)

Multiple Hops

- The hops help to look back and reconsider the most similar users to infer more precise neighborhood.

- The model apply a nonlinear prejection between hops:

- The initial query $z_{ui}^0 = m_u + e_i$.

- The user preference $z_{ui}^h$ is used to solicit the user neighborhood.

- The output model receives the weighted neighborhood vector from the last hop $H^{th}$ to produce the final recommendation.

3. Experimant

Code sources

Enviroment

Linux

Python 3.6

TensorFlow 1.4+

dm-sonnet # python module

Model parameters

{

"batch_size": 128,

"decay_rate": 0.9,

"embed_size": 50,

"filename": "/media/files/szq/CMN/data/citeulike-a.npz",

"grad_clip": 5.0,

"hops": 2,

"item_count": "16980",

"l2": 0.1,

"learning_rate": 0.001,

"logdir": "result/011/",

"max_neighbors": 311,

"neg_count": 4,

"optimizer": "rmsprop",

"optimizer_params": "{'momentum': 0.9, 'decay': 0.9}",

"pretrain": "/media/files/szq/CMN/pretrain/citeulike-a_e50.npz",

"save_directory": "result/011/",

"tol": 1e-05,

"user_count": "5551"

}

Datasets frame

- This code approach on citeulike-a dataset, which collected from CiteULike, an online service provides user a digital catalog to save and share academic papers. User preferences are encoded as 1 if the user has save the paper(item) in their library.

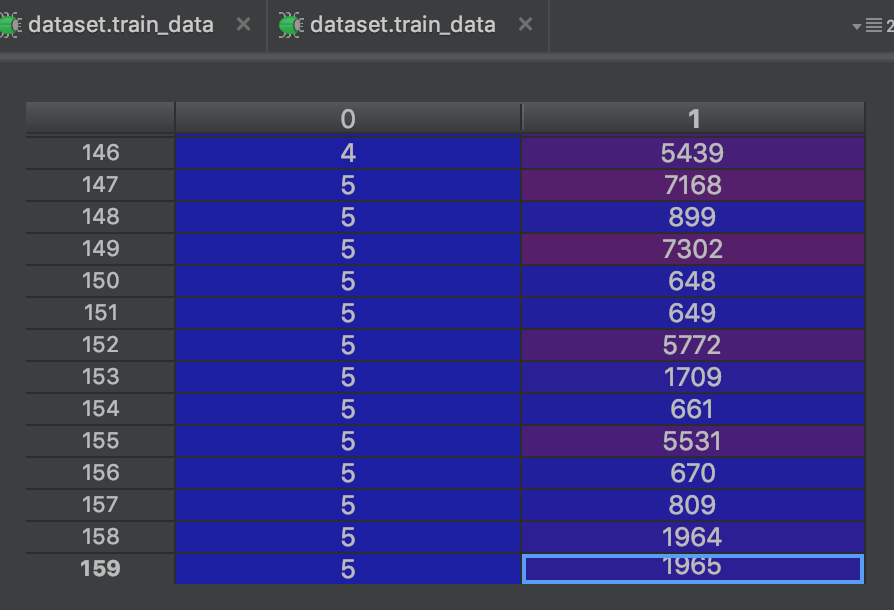

- The format of train data and test data have been processed as follows:

We can see both the train data and the text data are matrix of $N*2$, $N$ means the number of the data; meanwhile the first column is user’s id, the second is paper’s(item) id. Emphasize again User preferences are encoded as 1 if the user has save the paper(item).

We can see both the train data and the text data are matrix of $N*2$, $N$ means the number of the data; meanwhile the first column is user’s id, the second is paper’s(item) id. Emphasize again User preferences are encoded as 1 if the user has save the paper(item).